799 Downloads Updated 1 year ago

Large World Model (LWM) is an open-source model trained from LLaMA-2 on a filtered subset of Books3 data.

7b

ollama run ifioravanti/lwm:7b-1m-text-chat-q5_k_m


Readme

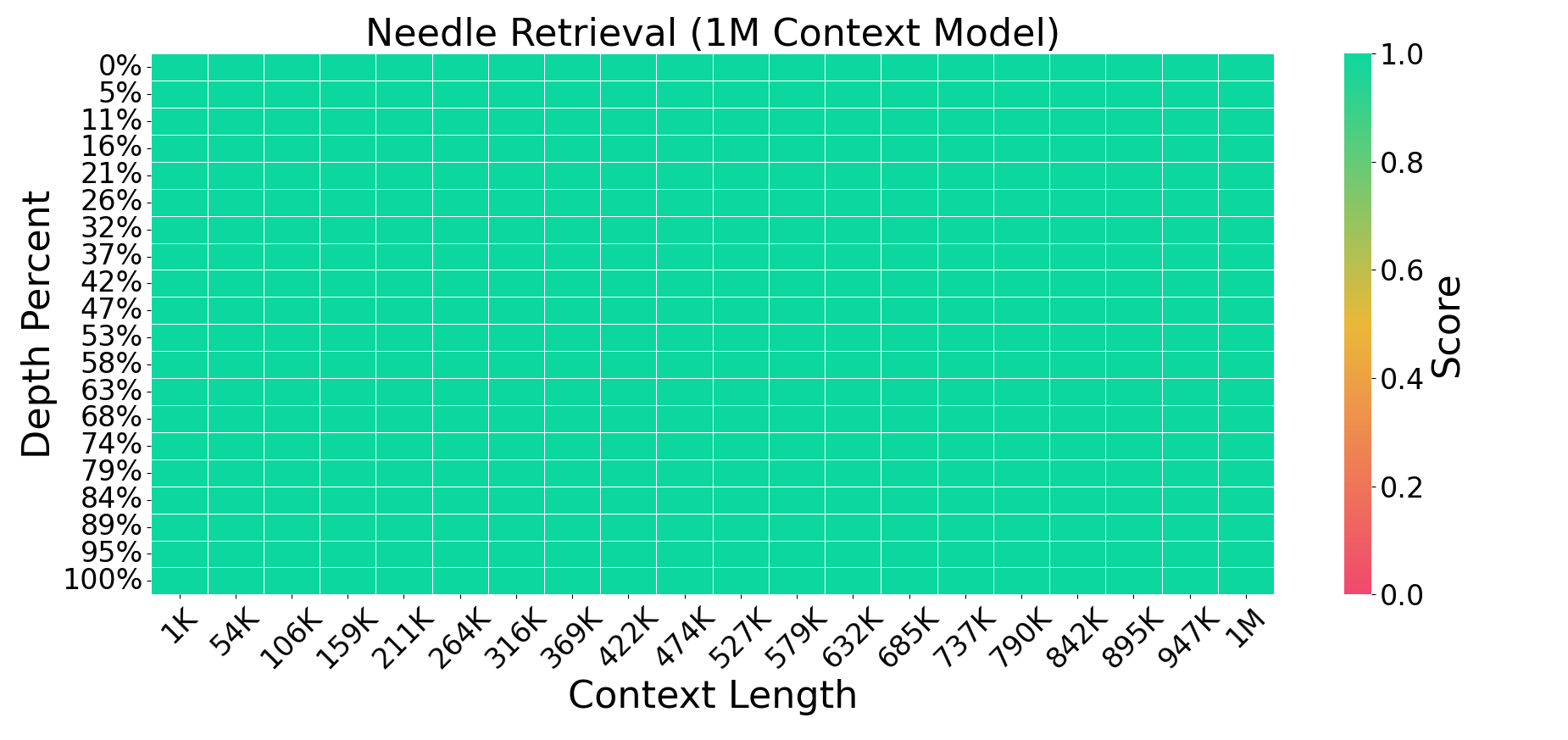

LWM-Text and LWM-Text-Chat are a family of 7B-parameter models capable of processing long text documents of over 1M tokens.

CLI

ollama run ifioravanti/lwm
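Ollama serves models with a context window far smaller than 1M tokens unless you ask for more. One way to make a larger window persistent is a derived model built from a Modelfile. The sketch below assumes the `7b-1m-text-chat-q5_k_m` tag shown above. The helper name `write_modelfile` and the example size of 32768 tokens are illustrative choices, not values recommended by the model author.

```shell
# Hypothetical helper: write an Ollama Modelfile that derives a variant of
# LWM with a larger context window (num_ctx, in tokens).
# $1: output path for the Modelfile
# $2: desired context size in tokens (illustrative; larger = more memory)
write_modelfile() {
  out="$1"
  num_ctx="$2"
  cat > "$out" <<EOF
FROM ifioravanti/lwm:7b-1m-text-chat-q5_k_m
PARAMETER num_ctx $num_ctx
EOF
}
```

For example, run `write_modelfile ./Modelfile 32768`, then `ollama create lwm-32k -f ./Modelfile` and `ollama run lwm-32k`. The name `lwm-32k` is arbitrary. Raise `num_ctx` gradually, because memory use grows with the context size.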

API

Example:

curl -X POST http://localhost:11434/api/generate -d '{
  "model": "ifioravanti/lwm",
  "prompt": "Here is a story about llamas eating grass"
}'
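The request above relies on the server's default context size. Ollama's `/api/generate` endpoint also accepts an `options` object, where `num_ctx` sets the context window, and `"stream": false` returns a single JSON response. The sketch below builds such a request body. The helper `build_request` is hypothetical, and it does no JSON escaping, so the prompt must not contain double quotes or backslashes.

```shell
# Hypothetical helper: print a /api/generate request body with a custom
# context window. No JSON escaping is done: keep quotes and backslashes
# out of the prompt (a sketch, not a robust client).
# $1: model name, $2: prompt text, $3: context size in tokens
build_request() {
  model="$1"
  prompt="$2"
  num_ctx="$3"
  printf '{"model": "%s", "prompt": "%s", "stream": false, "options": {"num_ctx": %d}}\n' \
    "$model" "$prompt" "$num_ctx"
}
```

For example, `build_request ifioravanti/lwm "Here is a story about llamas eating grass" 32768 | curl -X POST http://localhost:11434/api/generate -d @-` pipes the body to the same endpoint. Here `-d @-` tells curl to read the data from standard input.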

Memory requirements

7B models generally require at least 8 GB of RAM. Because this model supports a context of up to 1M tokens, however, it can require far more memory, depending on how much context is passed.
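Most of the extra memory goes to the key/value (KV) cache, which grows linearly with context length. Below is a rough estimate under stated assumptions. It assumes LLaMA-2-7B shapes (32 layers, hidden size 4096, no grouped-query attention) and an f16 cache at 2 bytes per element. It leaves out the model weights and compute buffers, and it ignores KV-cache quantization, so treat the numbers as ballpark figures only.

```shell
# Rough KV-cache size for a LLaMA-2-7B-shaped model (assumed shapes, f16 cache).
# Per token: 2 (K and V) * layers * hidden size * bytes per element.
# $1: context length in tokens
kv_cache_bytes() {
  n_ctx="$1"
  n_layers=32
  d_model=4096
  bytes_per_elem=2
  echo $(( 2 * n_layers * d_model * bytes_per_elem * n_ctx ))
}

# Same estimate in whole GiB (integer division, rounds down).
kv_cache_gib() {
  echo $(( $(kv_cache_bytes "$1") / 1073741824 ))
}
```

Under these assumptions each token costs 512 KiB of cache. `kv_cache_gib 4096` prints `2`, and `kv_cache_gib 1048576` (a full 1M-token context) prints `512`, on top of the weights themselves.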