17 Downloads Updated 1 year ago

MIT-licensed 400-billion-parameter model by the Jabir Project

ollama run haghiri/jabir-400b:q4_k
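
Once the model has been pulled, it can also be queried programmatically. The following is a minimal sketch, assuming an Ollama server is already running locally on its default port (11434) and exposes the standard `/api/generate` endpoint. The helper names `build_payload` and `generate` are hypothetical and used only for illustration; they are not part of the Jabir Project.

```python
import json
import urllib.request

# Model tag taken from the run command above.
MODEL = "haghiri/jabir-400b:q4_k"
# Assumption: Ollama is serving on its default local address.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(prompt: str, model: str = MODEL) -> dict:
    """Build the JSON body for a single, non-streaming generation request."""
    return {"model": model, "prompt": prompt, "stream": False}


def generate(prompt: str, url: str = OLLAMA_URL) -> str:
    """Send the prompt to a running Ollama server and return the generated text."""
    body = json.dumps(build_payload(prompt)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        # With "stream": False the server replies with one JSON object
        # whose "response" field holds the full completion.
        return json.loads(response.read())["response"]
```

Calling `generate("Introduce yourself in Persian.")` would return the completion as a string, provided the server is reachable and the model fits in available memory.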

Details

42ed1e83d1cf · 149GB

Readme

Jabir 400B

Introduction

This model is part of the Jabir Project by Muhammadreza Haghiri. It is a large model, well trained on multiple languages, with the goal of democratizing AI.

After the success of Mann-E, another of Haghiri’s projects, he gathered a team to work on a large language model with a good understanding of the Persian language (alongside other languages).

Jabir is a 405-billion-parameter model based on LLaMA 3.1, and it was not openly available until now. The project will continue to improve, and this repository will be updated accordingly.

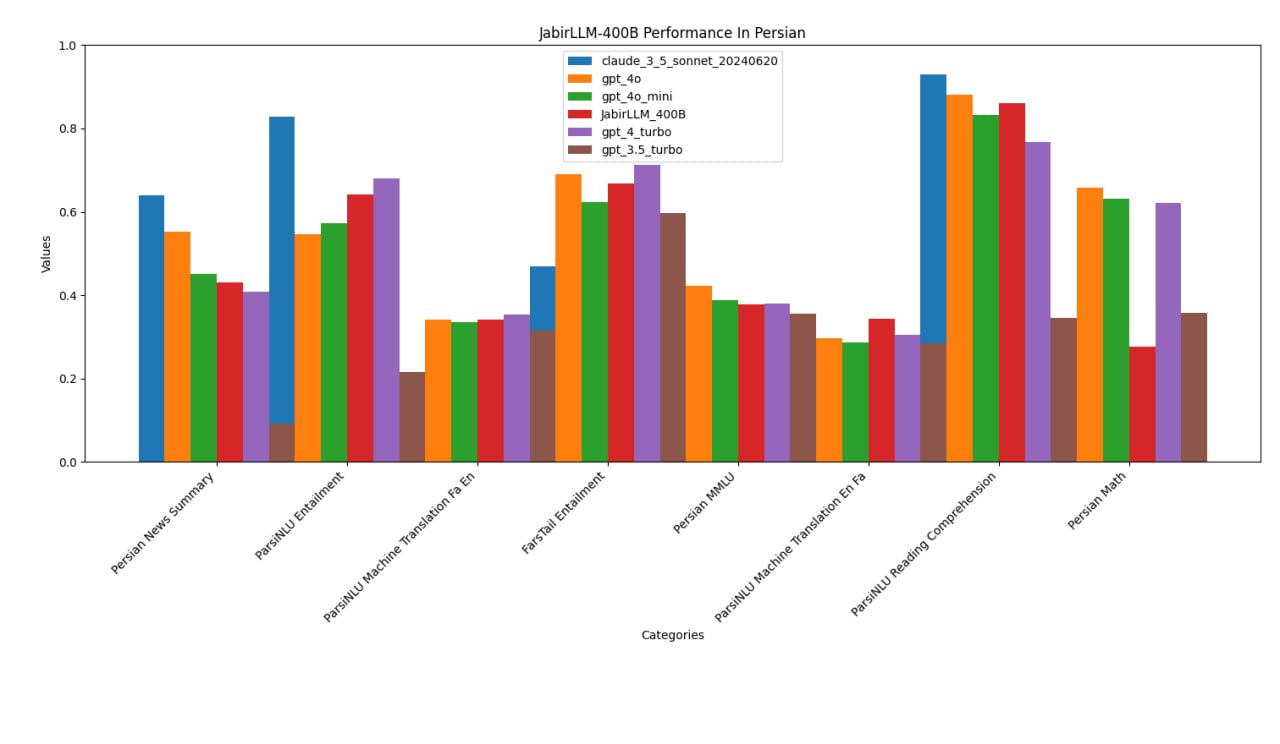

Benchmarks

License

This model is released under the MIT License.