Reduce hallucinations with Bespoke-Minicheck

September 18, 2024

Bespoke-Minicheck is a new grounded factuality checking model developed by Bespoke Labs that is now available in Ollama. It can fact-check responses generated by other models to detect and reduce hallucinations.

How it works

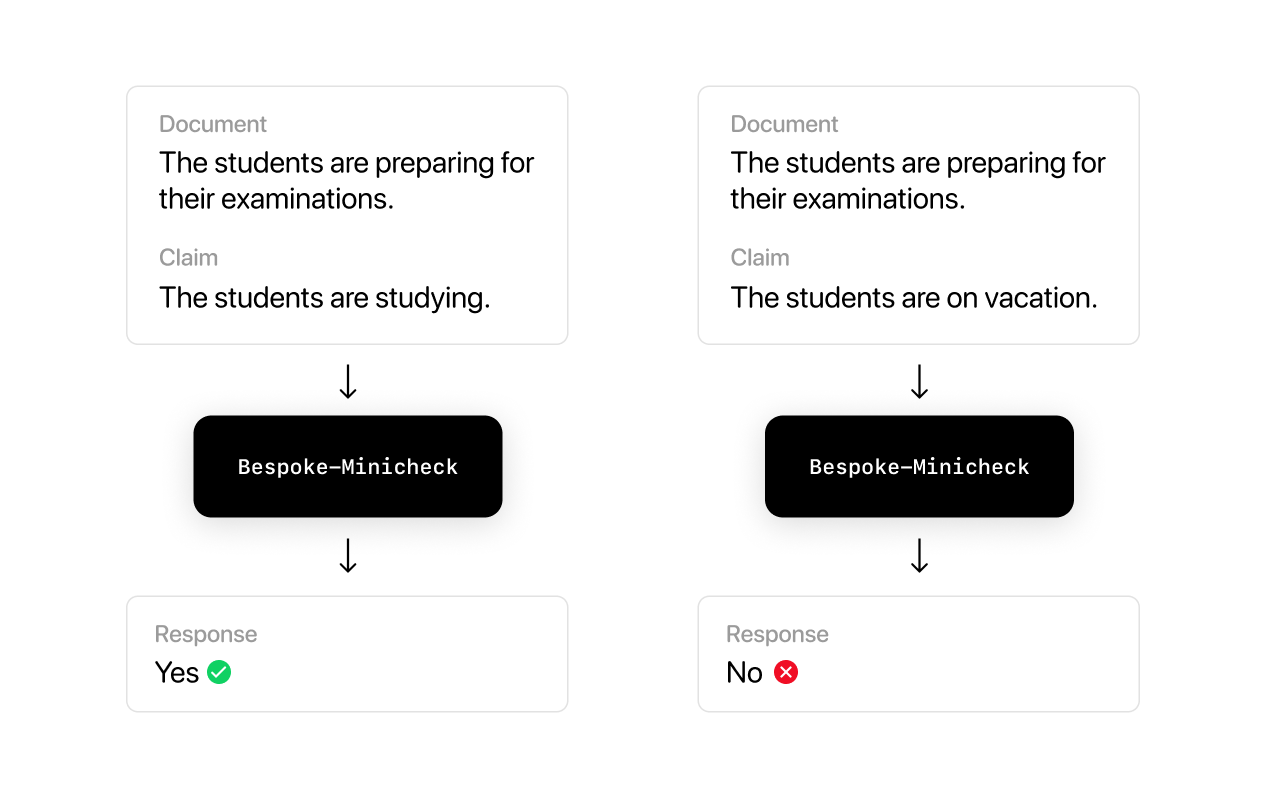

Bespoke-Minicheck works by taking chunks of factual information (i.e. the Document) and generated output (i.e. the Claim) and verifying the claim against the document. If the document supports the claim, the model will output Yes. Otherwise, it will output No:
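A minimal Python sketch of this Document/Claim contract, assuming the prompt layout shown under Getting started (a `Document:` line followed by a `Claim:` line). The helper names `build_prompt` and `parse_verdict` are hypothetical and not part of Ollama or Bespoke Labs tooling. `parse_verdict` raises an error on anything other than Yes or No instead of guessing.

```python
def build_prompt(document: str, claim: str) -> str:
    """Combine the source document and the claim into a single prompt.

    Assumes the "Document: ... / Claim: ..." layout used in this post.
    """
    return f"Document: {document.strip()}\nClaim: {claim.strip()}"


def parse_verdict(output: str) -> bool:
    """Map the model's answer to True (supported) or False (not supported)."""
    # Normalize surrounding whitespace, a trailing period, and letter case.
    answer = output.strip().rstrip(".").lower()
    if answer == "yes":
        return True
    if answer == "no":
        return False
    raise ValueError(f"unexpected model output: {output!r}")


prompt = build_prompt(
    "A group of students gather in the school library to study for their upcoming final exams.",
    "The students are preparing for an examination.",
)
print(prompt)
print(parse_verdict("Yes"))   # True
print(parse_verdict("No\n"))  # False
```

Treating unexpected output as an error keeps a malformed answer from being silently counted as "supported".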

RAG use case

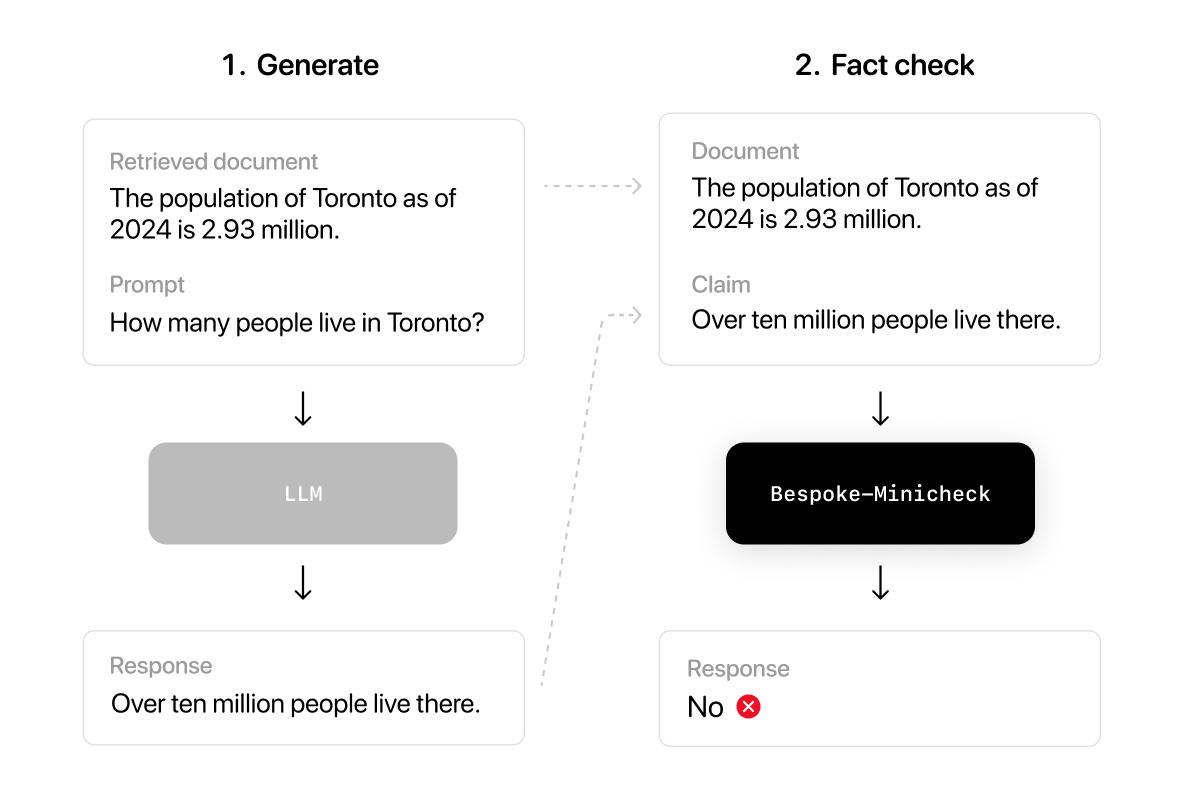

Bespoke-Minicheck is especially powerful when building Retrieval Augmented Generation (RAG) applications, as it can be used to make sure responses are grounded in the retrieved context provided to the LLM. This can be done as a post-processing step to detect hallucinations:
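One way to sketch that post-processing step in Python, under stated assumptions: the generated answer is split into sentences with a naive regex, and each sentence is checked as a separate claim against the concatenated retrieved chunks. The `is_supported` callable is a hypothetical stand-in for a call to Bespoke-Minicheck; it is passed in so the logic can run without a model. This is an illustration, not the approach used in the RAG example on GitHub.

```python
import re
from typing import Callable, List


def split_sentences(text: str) -> List[str]:
    """Naively split text into sentences on '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def find_unsupported(
    answer: str,
    retrieved_chunks: List[str],
    is_supported: Callable[[str, str], bool],
) -> List[str]:
    """Return the sentences of `answer` that the checker says the context does not support.

    `is_supported(document, claim)` is a hypothetical hook; in a real pipeline it
    would send a Document/Claim prompt to bespoke-minicheck and parse Yes/No.
    """
    context = "\n".join(retrieved_chunks)
    return [s for s in split_sentences(answer) if not is_supported(context, s)]


def toy_checker(document: str, claim: str) -> bool:
    """Demo-only checker: 'supported' if every word of the claim occurs in the document."""
    doc_words = set(re.findall(r"\w+", document.lower()))
    return all(w in doc_words for w in re.findall(r"\w+", claim.lower()))


chunks = ["The library opens at 8 am.", "Final exams start on Monday."]
answer = "The library opens at 8 am. Exams are cancelled this year."
print(find_unsupported(answer, chunks, toy_checker))
```

Checking sentence by sentence makes it possible to flag or drop only the unsupported parts of an answer rather than rejecting the whole response.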

For an example of how to use Bespoke-Minicheck in a RAG application using Ollama, see the RAG example on GitHub.

Getting started

Start by downloading and running the model:

ollama run bespoke-minicheck
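Once the model has been downloaded, it can also be called programmatically. The sketch below uses only the Python standard library against Ollama's local REST endpoint `POST /api/generate`, assuming the server is listening on the default `http://localhost:11434`. The helper names are hypothetical, and setting `temperature` to 0 in `options` is our own choice to keep the Yes/No answer deterministic, not a documented requirement of the model.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # default local Ollama address (assumed)


def make_request(document: str, claim: str, model: str = "bespoke-minicheck") -> urllib.request.Request:
    """Build a non-streaming /api/generate request for one document/claim pair."""
    payload = {
        "model": model,
        "prompt": f"Document: {document}\nClaim: {claim}",
        "stream": False,  # return one JSON object instead of a stream of chunks
        "options": {"temperature": 0},  # our choice: deterministic answers
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def check_claim(document: str, claim: str) -> str:
    """Send the request and return the model's raw answer (expected to be "Yes" or "No")."""
    with urllib.request.urlopen(make_request(document, claim)) as resp:
        return json.loads(resp.read())["response"].strip()
```

With the server running, calling `check_claim` on the library example below should return the same answer as the interactive session.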

Next, write the prompt as follows, providing both the source document and the claim:

Document: A group of students gather in the school library to study for their upcoming final exams.

Claim: The students are preparing for an examination.

Since the source information supports the claim, the model will output Yes.

Yes

However, when the claim is not supported by the document, the model will respond with No:

Document: A group of students gather in the school library to study for their upcoming final exams.

Claim: The students are out on vacation.

No

For an example of how to use Bespoke-Minicheck to fact-check a claim against source information using Ollama, see the fact-checking example on GitHub.