10 Downloads Updated 3 days ago

ollama run baichuan-inc/baichuan-m3-235b:q4_k_m

Details

Updated 3 days ago

3 days ago

fac52890ab60 · 144GB ·

Readme

Baichuan-M3-235B

From Inquiry to Decision: Building Trustworthy Medical AI

🏥 Experience AI-Powered Medical Inquiry: ying.ai

🌟 Model Overview

Baichuan-M3 is Baichuan AI’s new-generation medical-enhanced large language model, a major milestone following Baichuan-M2.

In contrast to prior approaches that primarily focus on static question answering or superficial role-playing, Baichuan-M3 is trained to explicitly model the clinical decision-making process, aiming to improve usability and reliability in real-world medical practice. Rather than merely producing “plausible-sounding answers” or high-frequency vague recommendations like “you should see a doctor soon,” the model is trained to proactively acquire critical clinical information, construct coherent medical reasoning pathways, and systematically constrain hallucination-prone behaviors.

Core Highlights

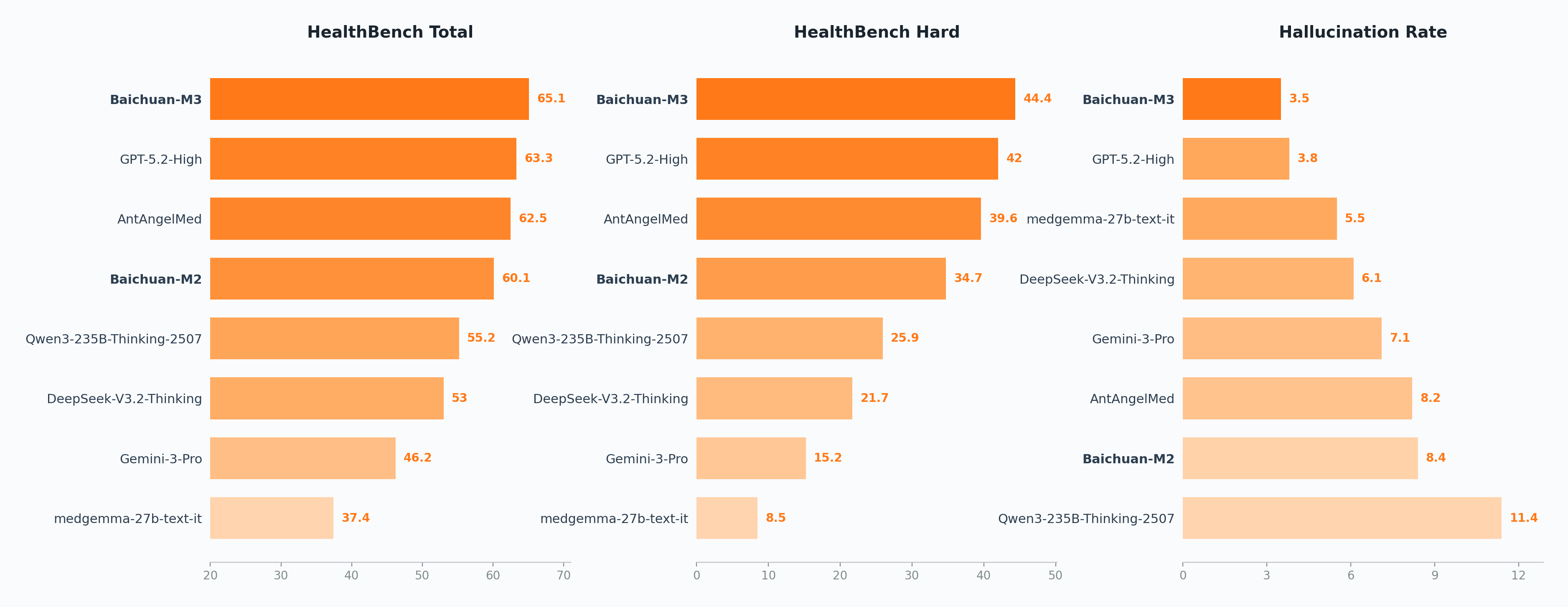

- 🏆 Surpasses GPT-5.2: Outperforms OpenAI’s latest model across HealthBench, HealthBench-Hard, hallucination evaluation, and SCAN-bench, establishing a new SOTA in medical AI

- 🩺 High-Fidelity Clinical Inquiry: The only model to rank first across all three SCAN-bench dimensions—Clinical Inquiry, Laboratory Testing, and Diagnosis

- 🧠 Low Hallucination, High Reliability: Achieves lower hallucination rate than GPT-5.2 through Fact-Aware RL, even without external tools

- ⚡ Efficient Deployment: W4 quantization reduces memory to 26% of original; Gated Eagle3 speculative decoding achieves 96% speedup

📊 Performance

HealthBench & Hallucination Evaluation

HealthBench is OpenAI’s authoritative medical benchmark, constructed by 262 practicing physicians from 60 countries, comprising 5,000 high-fidelity multi-turn clinical conversations.

Compared to Baichuan-M2, Baichuan-M3 improves by 28 percentage points on HealthBench-Hard, reaching 44.4 and surpassing GPT-5.2. It also ranks first on the HealthBench Total leaderboard.

For hallucination evaluation, we decompose long-form responses into fine-grained, verifiable atomic medical claims and validate each against authoritative medical evidence. Even in a tool-free setting, Baichuan-M3 achieves lower hallucination rate than GPT-5.2.

SCAN-bench Evaluation

SCAN-bench is our end-to-end clinical decision-making benchmark that simulates the complete clinical workflow from patient encounter to final diagnosis, evaluating models’ high-fidelity clinical inquiry capabilities through three stations: History Taking, Ancillary Investigations, and Final Diagnosis.

Baichuan-M3 ranks first across all three core dimensions, outperforming the second-best model by 12.4 points in Clinical Inquiry.

📢 The SCAN-bench will be open-sourced soon. Stay tuned.

🔬 Technical Features

📖 For detailed technical information, please refer to: Tech Blog

SPAR: Segmented Pipeline Reinforcement Learning

To address reward sparsity and credit assignment challenges in long clinical interactions, we propose SPAR (Step-Penalized Advantage with Relative baseline): it decomposes clinical workflows into four stages—history taking, differential diagnosis, laboratory testing, and final diagnosis—each with independent rewards, combined with process-level rewards for precise credit assignment, driving the model to construct auditable and complete decision logic.

Fact-Aware Reinforcement Learning

By integrating factual verification directly into the RL loop, we build an online hallucination detection module that validates model-generated medical claims against authoritative medical evidence in real-time, supported by efficient caching mechanisms for online RL training. A dynamic reward aggregation strategy adaptively balances task learning and factual constraints based on the model’s capability stage, significantly enhancing medical factual reliability without sacrificing reasoning depth.

Efficient Training and Inference

Adopts a three-stage multi-expert fusion training paradigm (Domain-Specific RL → Offline Distillation → MOPD), combined with Gated Eagle3 speculative decoding (96% speedup) and W4 quantization (only 26% memory) for efficient deployment.

🔧 Quick Start

For deploying the Q4_K_M quantized model, you can use llama.cpp or ollama, please visit their website to get the specific operational steps for deploying the model.

⚠️ Usage Notices

- Medical Disclaimer: For research and reference only; cannot replace professional medical diagnosis or treatment

- Intended Use Cases: Medical education, health consultation, clinical decision support

- Safe Use: Recommended under guidance of medical professionals

📄 License

Licensed under the Apache License 2.0. Research and commercial use permitted.

🤝 Acknowledgements

- Base Model: Qwen3

- Training Framework: verl

- Inference Engines: vLLM, SGLang

Thank you to the open-source community. We commit to continuous contribution and advancement of healthcare AI.

📞 Contact Us

- Official Website: Baichuan AI

- Technical Support: GitHub

📚 Citation

@article{Baichuan-M3 Technical Report,

title={Baichuan-M3: Modeling Clinical Inquiry for Reliable Medical Decision-Making},

author={Baichuan-M3 Team: Chengfeng Dou, Fan Yang, Fei Li, Jiyuan Jia, Qiang Ju, Shuai Wang, Tianpeng Li, Xiangrong Zeng, Yijie Zhou, Hongda Zhang, Jinyang Tai, Linzhuang Sun, Peidong Guo, Yichuan Mo, Xiaochuan Wang, Hengfu Cui, Zhishou Zhang},

journal={arXiv preprint arXiv:2602.06570},

year={2026}

}